Monday, December 22, 2014

Computing in Science, including a walk down memory lane

One of the bigger changes in science over the past 20+ years has been the increasing importance of computing. That's probably not a surprise to anyone who has been paying attention, but some of the changes go beyond what one might expect.

Computers have been used to collect and analyze data for way more than 20 years. When I was in graduate school, our experiment (the Mark II detector at PEP) was controlled by a computer, as were all large experiments. The data was written on 6250 bpi (bits per inch) 9-track magnetic tapes. These tapes were about the size and weight of a small laptop, and were written and read by tape drives the size of a small refrigerator. Each tape could hold a whopping 140 megabytes. This data was transferred to the central computing center via a fast network - a motor scooter with a hand basket. It was a short drive, and, if you packed the basket tightly enough, the data rate was quite high. The data was then analyzed on a central cluster of a few large IBM mainframes.

At the time, theorists were also starting to use computers, and the first programs for symbolic manipulation (computer algebra) were becoming available. Their use has exploded over the past 20 years, and now most theoretical calculations require computers. For complex theories like quantum chromodynamics (QCD), the calculations are way too complicated for pencil and paper. In fact, the only way that we know to calculate QCD parameters from first principles requires large-scale computing. It is called lattice gauge theory. It divides space-time into a lattice of cells, and uses sophisticated relaxation methods to calculate configurations of quarks and gluons on that lattice. Lattice gauge theory has been around since the 1970s, but only in the last decade or so have computers become powerful enough to complete calculations using physically realistic parameters.
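To make the lattice idea concrete, here is a minimal toy sketch. It is not QCD: it assumes the much simpler compact U(1) gauge theory in two dimensions, where each link between neighboring lattice sites carries an angle, and it uses the Metropolis Monte Carlo algorithm (a standard importance-sampling method, swapped in here for the more sophisticated algorithms used in real lattice QCD) to generate configurations. Two dimensions are chosen because the average plaquette then has a known exact answer, I1(beta)/I0(beta), to check against. All function names and parameter values are illustrative.

```python
import math
import random


def plaquette_angle(theta, x, y, size):
    """Sum of link angles around the unit square with lower-left corner (x, y)."""
    xp, yp = (x + 1) % size, (y + 1) % size
    # theta[x][y][mu] is the angle on the link leaving (x, y) in direction mu (0 = x, 1 = y)
    return theta[x][y][0] + theta[xp][y][1] - theta[x][yp][0] - theta[x][y][1]


def plaquettes_touching(x, y, mu, size):
    """Lower-left corners of the two plaquettes that contain a given link (periodic lattice)."""
    if mu == 0:
        return [(x, y), (x, (y - 1) % size)]
    return [(x, y), ((x - 1) % size, y)]


def local_action(theta, beta, corners, size):
    """Action S = -beta * sum(cos(plaquette)), restricted to the given plaquettes."""
    return -beta * sum(math.cos(plaquette_angle(theta, px, py, size)) for px, py in corners)


def average_plaquette(theta, size):
    total = sum(math.cos(plaquette_angle(theta, x, y, size))
                for x in range(size) for y in range(size))
    return total / size**2


def simulate(size=8, beta=2.0, sweeps=600, thermalize=100, step=1.0, seed=1):
    """Metropolis Monte Carlo for 2D compact U(1); returns the mean plaquette after thermalization."""
    rng = random.Random(seed)
    theta = [[[0.0, 0.0] for _ in range(size)] for _ in range(size)]  # "cold" start
    samples = []
    for sweep in range(sweeps):
        for x in range(size):
            for y in range(size):
                for mu in (0, 1):
                    corners = plaquettes_touching(x, y, mu, size)
                    old_angle = theta[x][y][mu]
                    s_old = local_action(theta, beta, corners, size)
                    theta[x][y][mu] = old_angle + rng.uniform(-step, step)  # symmetric proposal
                    s_new = local_action(theta, beta, corners, size)
                    # accept with probability min(1, exp(-(s_new - s_old))), otherwise restore
                    if rng.random() >= math.exp(min(0.0, s_old - s_new)):
                        theta[x][y][mu] = old_angle
        if sweep >= thermalize:
            samples.append(average_plaquette(theta, size))
    return sum(samples) / len(samples)


def bessel_i(n, x, terms=40):
    """Modified Bessel function I_n(x) from its power series (adequate for small x)."""
    return sum((x / 2) ** (2 * k + n) / (math.factorial(k) * math.factorial(k + n))
               for k in range(terms))


print("Monte Carlo:", simulate())
print("Exact (infinite lattice):", bessel_i(1, 2.0) / bessel_i(0, 2.0))
```

With these settings the Monte Carlo average should land close to the exact value of about 0.698. Real lattice QCD replaces the angles with SU(3) matrices on a four-dimensional lattice, which is where the large-scale computing comes in.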

Lattice gauge theory is really the tip of the iceberg, though. Computer simulations have become so powerful and so ubiquitous that they have become a third approach to physics, alongside theory and experiment. For example, one major area of nuclear/particle physics research is developing codes that can simulate high-energy collisions. These collisions are so complicated, with so many competing phenomena present, that they cannot be understood from first principles. In heavy-ion studies at the Relativistic Heavy Ion Collider (RHIC) and CERN's Large Hadron Collider (LHC), for instance, when two ions collide, the number of nucleon-nucleon (proton or neutron) collisions depends on the geometry of the collision. The nucleon-nucleon collisions produce a number of quarks and gluons, which then interact with each other. Eventually, the quarks and gluons form hadrons (mesons, composed of a quark and an antiquark, and baryons, made of three quarks). These hadrons then interact for a while and, eventually, fly apart. During both interaction phases, short- and long-range forces are in play, and it appears that a certain component of the interactions may be better described using hydrodynamics (treating the interacting matter as a liquid) than in terms of traditional particle interactions. A large number of computer codes have been developed to simulate these interactions, each emphasizing different aspects. A cottage industry has developed to compare the codes, use them to make predictions for new types of observables, and expand their range of applicability. Similar comments apply, broadly, to many other areas of physics, including solid-state physics (e.g., semiconductors and superconductivity) and nanophysics.
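The statement that the number of nucleon-nucleon collisions depends on geometry is commonly quantified with a Glauber Monte Carlo. A minimal sketch, assuming nucleons distributed uniformly in a hard sphere of radius 1.2 A^(1/3) fm (real codes use a Woods-Saxon profile), a nucleon-nucleon cross section of 42 mb (4.2 fm^2, a typical value assumed here for top RHIC energy), and gold nuclei (A = 197). Two nucleons are counted as colliding if their transverse separation is below sqrt(sigma/pi). Function names are illustrative.

```python
import math
import random


def sample_transverse_positions(mass_number, radius_fm, rng):
    """Nucleon (x, y) positions drawn uniformly from a hard sphere (a stand-in for Woods-Saxon)."""
    positions = []
    while len(positions) < mass_number:
        x, y, z = (rng.uniform(-radius_fm, radius_fm) for _ in range(3))
        if x * x + y * y + z * z <= radius_fm ** 2:
            positions.append((x, y))  # only the transverse plane matters for the overlap
    return positions


def glauber_event(impact_parameter_fm, mass_number=197, sigma_nn_fm2=4.2, seed=None):
    """Return (n_coll, n_part) for one collision of two identical nuclei."""
    rng = random.Random(seed)
    radius = 1.2 * mass_number ** (1 / 3)   # fm, simple nuclear-radius rule
    d2_max = sigma_nn_fm2 / math.pi         # squared distance at which two nucleons collide
    half_b = impact_parameter_fm / 2
    nucleus_a = [(x - half_b, y) for x, y in sample_transverse_positions(mass_number, radius, rng)]
    nucleus_b = [(x + half_b, y) for x, y in sample_transverse_positions(mass_number, radius, rng)]
    n_coll = 0
    hit_a, hit_b = set(), set()
    for i, (xa, ya) in enumerate(nucleus_a):
        for j, (xb, yb) in enumerate(nucleus_b):
            if (xa - xb) ** 2 + (ya - yb) ** 2 < d2_max:
                n_coll += 1          # one binary nucleon-nucleon collision
                hit_a.add(i)         # nucleons that collided at least once are "participants"
                hit_b.add(j)
    return n_coll, len(hit_a) + len(hit_b)


for b in (0.0, 6.0, 12.0):
    n_coll, n_part = glauber_event(b, seed=7)
    print(f"b = {b:4.1f} fm: {n_coll} binary collisions, {n_part} participating nucleons")
```

Central collisions (small impact parameter b) produce hundreds of binary collisions, while grazing ones produce few or none; this geometry sets the initial conditions that the more elaborate simulation codes then evolve.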

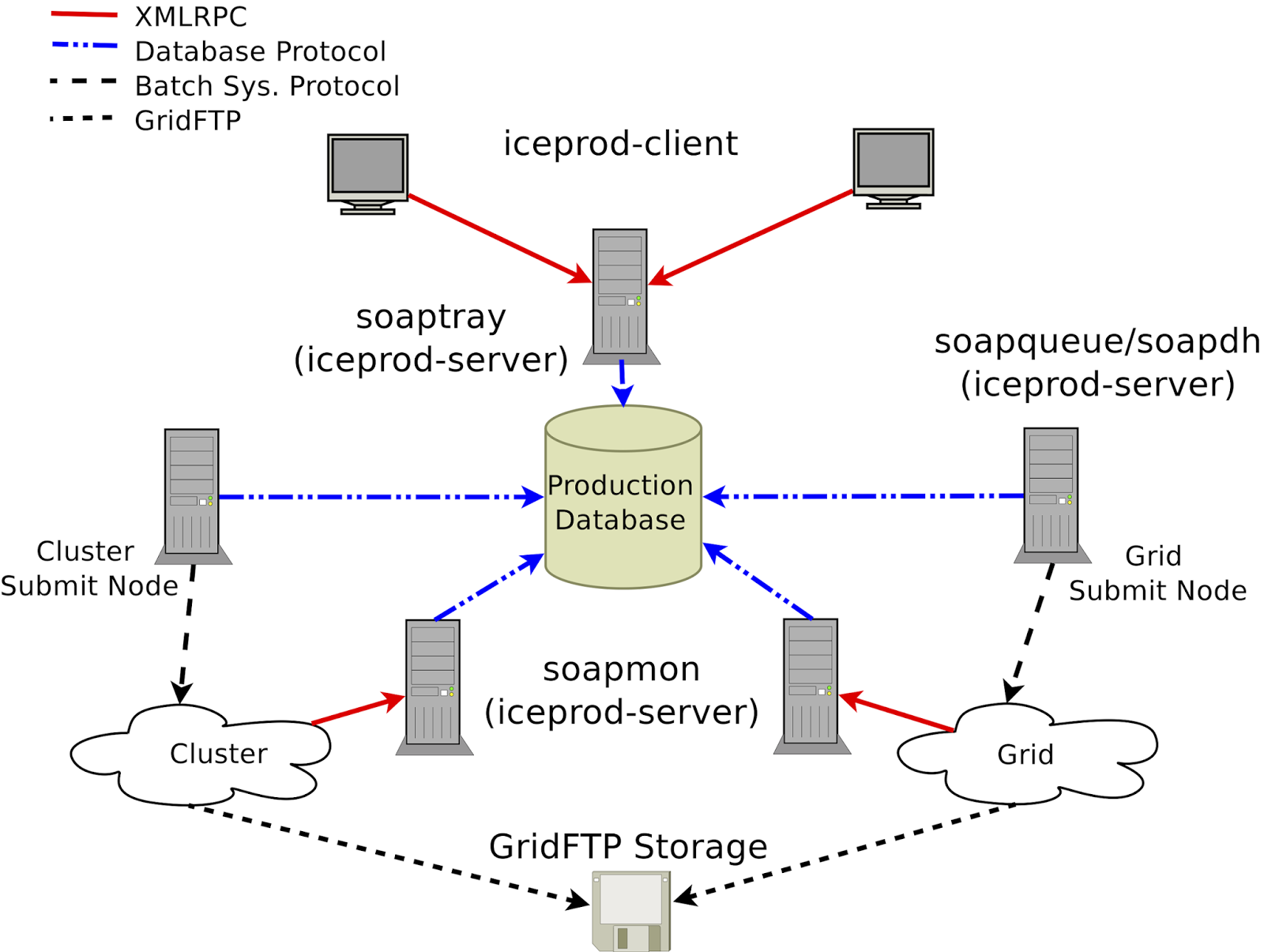

Of course, computing is also important for Antarctic neutrino experiments. IceCube uses computers for data collection (each Digital Optical Module, or DOM, contains an embedded CPU), for event selection and reconstruction, and, particularly, for detector simulation. The hardest part of the simulation involves propagating photons through the ice, from the charged particle that creates them to the DOMs. Here, we need good models of how light scatters and is absorbed in the ice; developing and improving these models has been an ongoing endeavor over the past decade. Lisa Gerhardt, Juan Carlos Diaz Velez and I have written an article discussing IceCube's ongoing challenges, "Adventures in Antarctic Computing, or How I Learned to Stop Worrying and Love the Neutrino," which appeared in the September issue of IEEE Computer. I may be biased, but I think it's a really interesting article, which discusses some of the diverse computing challenges that IceCube faces. In addition to the algorithmic challenges, it also discusses the challenges inherent to the scale of IceCube computing, where we use computer clusters all over the world - hence the diagram at the top of the post.
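Photon propagation through ice is typically simulated by tracking individual photons between random scattering and absorption points. A minimal sketch, assuming isotropic scattering (real ice scatters strongly forward, so the scattering length here should be read as an effective one), homogeneous ice, and a point source. The scattering and absorption lengths below are hypothetical round numbers, not IceCube's ice model. The sketch estimates what fraction of photons travel a given distance before being absorbed.

```python
import math
import random


def isotropic_direction(rng):
    """Random unit vector, uniform on the sphere."""
    cos_t = rng.uniform(-1.0, 1.0)
    sin_t = math.sqrt(1.0 - cos_t * cos_t)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    return (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)


def fraction_reaching(distance_m, scattering_length_m, absorption_length_m,
                      n_photons=2000, seed=0):
    """Fraction of photons from a point source that reach distance_m before being absorbed."""
    rng = random.Random(seed)
    reached = 0
    for _ in range(n_photons):
        pos = (0.0, 0.0, 0.0)
        direction = isotropic_direction(rng)
        path_left = rng.expovariate(1.0 / absorption_length_m)  # total path before absorption
        while True:
            step = min(rng.expovariate(1.0 / scattering_length_m), path_left)
            pos = tuple(p + step * d for p, d in zip(pos, direction))
            path_left -= step
            # the sphere is convex, so checking the end of each straight step is enough
            if sum(p * p for p in pos) >= distance_m ** 2:
                reached += 1
                break
            if path_left <= 0.0:
                break  # absorbed
            direction = isotropic_direction(rng)  # scatter into a new direction
    return reached / n_photons


# hypothetical ice properties, for illustration only
print(fraction_reaching(50.0, scattering_length_m=1e9, absorption_length_m=100.0))
print(fraction_reaching(50.0, scattering_length_m=25.0, absorption_length_m=100.0))
```

With no scattering the answer is just exp(-distance/absorption length); turning scattering on lengthens the photon paths and so lowers the fraction, which is one reason good scattering models matter as much as absorption models.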

Monday, November 17, 2014

PINGU - which neutrino is heaviest?

To continue with a theme from an earlier post (neutrino oscillations): we have learned much about neutrino physics over the past decade. Neutrinos have mass, and can oscillate from one flavor into another. We know the mass differences, and we know the relative oscillation probabilities (mixing angles). But there are still a few very important open questions:

1) Are neutrinos their own antiparticles? This would mean that they are a type of particle known as "Majorana particles."

2) Do neutrinos violate CP (charge-parity) conservation?

3) Which neutrino is the lightest, and what is its mass? We know the mass differences, but not their ordering, and less about the absolute scale - the lightest mass is less than of order 1 electron volt, but it could be 0.1 electron volt or 1 nanoelectron volt.

Experiments to answer these questions are large and difficult, with some proposed efforts costing more than a billion dollars and taking more than a decade to build, and another decade to collect data.

However, IceCube has pointed the way to answering the third question relatively cheaply. We can do this by building an infill array, called PINGU (Precision IceCube Next Generation Upgrade), which will include a much denser array of photosensors, and so be sensitive to neutrinos with energies of a few GeV - an order of magnitude lower than IceCube's existing DeepCore infill array.

PINGU will precisely measure the oscillations of atmospheric neutrinos as they travel through the Earth. Oscillations occur in vacuum as well as in dense material. However, low-energy electron-flavored neutrinos (with energies of a few GeV) also scatter weakly and coherently from the electrons in the Earth as they travel through it, and this shifts how they oscillate. Importantly, the shift depends on whether the lightest neutrino is mostly the electron neutrino, or mostly other flavors. I say 'mostly' because, although neutrinos are produced as electron-, muon- or tau-neutrinos, oscillations mean that they travel through the Earth as a mixture of these states. But one of the states is mostly electron neutrino, and we'd like to know whether it is the lightest state. The graphic (top) shows the oscillation probability vs. neutrino energy and zenith angle (the angle at which the neutrino emerges from the Earth) for the two possible hierarchies. "Normal" means that the mostly-electron neutrino is the lightest, while "Inverted" means that it is not. The oscillogram is taken from the PINGU Letter of Intent; similar plots have been made by a number of people.
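The hierarchy dependence can be seen in the textbook two-flavor, constant-density approximation of the matter (MSW) effect. A minimal sketch, assuming a single mass splitting of about 2.4e-3 eV^2 with sin^2(2 theta) of about 0.09 (roughly the theta_13 value), a uniform density of 4.5 g/cm^3 with an electron fraction of 0.5 as a stand-in for the Earth's mantle, and neutrinos rather than antineutrinos. A positive splitting stands for the normal hierarchy and a negative one for the inverted hierarchy. The real PINGU calculation uses three flavors and a layered Earth model; function names are illustrative.

```python
import math

# matter term A = 2*sqrt(2)*G_F*N_e*E is about 1.52e-4 eV^2 * Y_e * rho[g/cm^3] * E[GeV]
A_COEFF_EV2 = 1.52e-4


def sin2_2theta_matter(sin2_2theta, dm2_ev2, energy_gev, density_g_cm3=4.5, electron_fraction=0.5):
    """Two-flavor effective mixing in matter of constant density (neutrinos, not antineutrinos)."""
    a_ev2 = A_COEFF_EV2 * electron_fraction * density_g_cm3 * energy_gev
    cos_2theta = math.sqrt(1.0 - sin2_2theta)  # assumes theta < 45 degrees
    denominator = (cos_2theta - a_ev2 / dm2_ev2) ** 2 + sin2_2theta
    return sin2_2theta / denominator


def resonance_energy_gev(sin2_2theta, dm2_ev2, density_g_cm3=4.5, electron_fraction=0.5):
    """Energy where A equals |dm2| * cos(2 theta); a true resonance for neutrinos only if dm2 > 0."""
    cos_2theta = math.sqrt(1.0 - sin2_2theta)
    return abs(dm2_ev2) * cos_2theta / (A_COEFF_EV2 * electron_fraction * density_g_cm3)


SIN2_2THETA13 = 0.09   # approximate
DM2 = 2.4e-3           # eV^2, approximate magnitude
e_res = resonance_energy_gev(SIN2_2THETA13, DM2)
normal = sin2_2theta_matter(SIN2_2THETA13, +DM2, e_res)
inverted = sin2_2theta_matter(SIN2_2THETA13, -DM2, e_res)
print(f"resonance near {e_res:.1f} GeV: normal {normal:.2f}, inverted {inverted:.3f}")
```

With these numbers the resonance falls near 7 GeV, where the effective mixing is nearly maximal for the normal hierarchy but suppressed to a few percent for the inverted one. For antineutrinos the matter term changes sign, so the roles reverse.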

We think that we can make this measurement with another 40 strings of digital optical modules, spread over an area smaller than DeepCore. Standalone, this will cost roughly $100M (less if it is built in tandem with a high-energy extension for IceCube), and take 3 years to install. Data taking will take another 3 years or so.

It is worth pointing out that this measurement will also boost efforts to study question 1 via neutrinoless double beta decay, a reaction in which an atomic nucleus decays by converting two neutrons into protons, while emitting two electrons and no neutrinos. The comparable process, two-neutrino double beta decay, involves the emission of two electrons and two neutrinos (strictly, antineutrinos). This has been observed, but the neutrinoless version has not. The lack of neutrino emission can be inferred from the absence of missing energy: the two electrons carry away the full decay energy. The expected rate of neutrinoless double beta decays depends on which neutrino is heaviest, among other things, so knowing the answer to question 3 will help provide a definitive answer to question 1.

Thursday, October 23, 2014

Looking ahead - is bigger better?

Now that we have a few years of IceCube data under our belt, it is natural to size up where we are, and where we want to go.

Where we are is pretty clear. We have observed a clear astrophysical neutrino signal, with a spectral index most likely in the 2.3-2.5 range, so the neutrino flux goes as dN_nu/dE_nu ~ E_nu^-gamma, with gamma between 2.3 and 2.5. However, we have seen no sign of a point source signal (either continuous or transient), so we do not yet know of any specific neutrino sources. Dedicated searches for neutrinos in coincidence with gamma-ray bursts (GRBs) have largely (but not completely) eliminated GRBs as the source of the observed neutrinos. Although we continue to collect statistics, we have enough data now that the only way that we could observe a statistically significant point source signal in the near future would be if a powerful transient source appears. It is likely that IceCube is too small to observe point sources.
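How a spectral index is extracted can be illustrated with an idealized toy. A minimal sketch, assuming a pure power law dN/dE ~ E^-gamma above a threshold e_min, perfect energy measurement, and no atmospheric background. Real IceCube fits must fold in the detector response and backgrounds, so this is only the textbook unbinned maximum-likelihood estimator, gamma = 1 + n / sum(ln(E_i / e_min)), applied to synthetic energies.

```python
import math
import random


def sample_power_law(n, gamma, e_min, rng):
    """Draw n energies from dN/dE ~ E^-gamma (gamma > 1) above e_min by inverse-transform sampling."""
    # CDF: F(E) = 1 - (E/e_min)^(1 - gamma); solving F(E) = u gives the expression below
    return [e_min * (1.0 - rng.random()) ** (-1.0 / (gamma - 1.0)) for _ in range(n)]


def fit_spectral_index(energies, e_min):
    """Unbinned maximum-likelihood estimate of gamma for a pure power law above e_min."""
    return 1.0 + len(energies) / sum(math.log(e / e_min) for e in energies)


rng = random.Random(42)
events = sample_power_law(20000, gamma=2.5, e_min=60.0, rng=rng)  # synthetic, arbitrary energy units
gamma_hat = fit_spectral_index(events, e_min=60.0)
# the statistical uncertainty of this estimator is roughly (gamma - 1) / sqrt(n)
sigma = (gamma_hat - 1.0) / math.sqrt(len(events))
print(f"fitted gamma = {gamma_hat:.3f} +/- {sigma:.3f}")
```

With a sample of only a few tens of events, the same (gamma - 1)/sqrt(n) scaling gives an uncertainty of order 0.2 to 0.3, which is one way to see why a larger detector would measure the spectrum better.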

Observing point sources is a natural focus for a next-generation instrument. ARIANNA (or another radio-neutrino detector) is clearly of high interest, but probably has an energy threshold too high to study the class of cosmic neutrinos seen by IceCube. So, there is growing interest in an optical Cherenkov detector like IceCube, but 10 times bigger. It wouldn't be possible to deploy 800 strings in a reasonable time (or at a reasonable cost), so most of the preliminary designs involve of order 100 strings of optical sensors with spacings larger than the 125 m prevalent in IceCube; 254 to 360 m are popular separations. It would also use better optical sensors than were available when we built IceCube.
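The trade-off between string count and spacing is simple geometry. A rough sketch, assuming strings sit on a triangular grid (so each string occupies a hexagonal cell of area (sqrt(3)/2) x spacing^2), ignoring edge effects, and assuming the instrumented depth stays about the same, so that volume scales with area. IceCube is approximated as its 78 strings on the 125 m grid, leaving out the 8 more closely spaced DeepCore strings. The function name is illustrative.

```python
import math


def hex_grid_area_km2(n_strings, spacing_m):
    """Footprint of n_strings on a triangular grid; each string 'owns' a hexagonal cell."""
    cell_area_m2 = (math.sqrt(3) / 2) * spacing_m ** 2
    return n_strings * cell_area_m2 / 1e6


icecube = hex_grid_area_km2(78, 125)  # standard IceCube strings only
print(f"IceCube: {icecube:.1f} km^2")
for spacing in (254, 360):
    area = hex_grid_area_km2(100, spacing)
    print(f"100 strings at {spacing} m: {area:.1f} km^2, {area / icecube:.1f}x IceCube")
```

This gives about 1.1 km^2 for IceCube, and roughly 5.6 and 11.2 km^2 for 100 strings at 254 and 360 m, so the wider spacing is what brings a design with of order 100 strings to about ten times IceCube's size.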

This detector would necessarily have a higher energy threshold than IceCube, but would be well-matched to the astrophysical neutrino signal spectrum. Clearly, it would do a much better job of measuring the neutrino energy spectrum than IceCube did.

But, the key question is whether it is sensitive enough to have a good chance of seeing point sources. There are many calculations which attempt to answer this question. Unfortunately, the results depend on the assumptions about the distribution of sources in the universe, and their strengths and energy spectra, and, at least so far, we cannot demonstrate this convincingly. So, stay tuned.

Of course, an alternative direction is toward an infill array with even higher density than DeepCore. This is at a more advanced stage of design, and will be covered in my next post.

Monday, October 13, 2014

Now you see them, now you don't - oscillating neutrinos

One of the most interesting particle physics discoveries over the past 20 years has been that neutrinos oscillate. That is, they change from one flavor into another. For example, an electron neutrino (produced in association with an electron) may, upon travelling some distance, interact as if it were a muon neutrino. If you have a detector that is only sensitive to one flavor (type) of neutrino, it may appear as if neutrinos are disappearing.

This oscillation is important for a number of reasons. First, it shows that neutrinos can mix - that the states that propagate through space are not exactly the same states that appear when they are created or interact. This is one of those weird quantum mechanical puzzles, in that you should use a different basis (set of possible states) to describe neutrinos as they are created or interact than when they are propagating through space.

The second is that it requires that neutrinos have mass, since it is the difference in mass that distinguishes the states that propagate through space. Many, many papers have been written about how this can work; Harry Lipkin has written a nice article that focuses on the interesting quantum mechanics. The presence of neutrino masses is often considered one of the first clear signs of physics beyond the 'standard model' of particle physics; dark matter and dark energy are two other signs.

There are two important parameters in neutrino oscillations. The first is the mixing angle \theta (do the neutrinos mix perfectly, or only a little bit), and the second is the difference of the squared masses (\delta m^2), which controls how far the neutrinos must travel for the oscillations to occur. For two flavors, the conversion probability is sin^2(2\theta) sin^2(\delta m^2 L/4E) (in natural units), where L is the distance traveled and E is the energy. Of course, since there are three neutrino flavors (electron, muon and tau), there is more than one \delta m^2 and mixing angle.
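The two-flavor oscillation formula is easy to evaluate numerically. A minimal sketch of the standard expression in practical units, P = sin^2(2 theta) sin^2(1.267 \delta m^2 L / E), with \delta m^2 in eV^2, L in km and E in GeV. The parameter values below (\delta m^2 of about 2.4e-3 eV^2, near-maximal mixing) are approximate atmospheric values assumed for illustration, and three-flavor and matter effects are ignored. Function names are illustrative.

```python
import math


def appearance_probability(sin2_2theta, dm2_ev2, length_km, energy_gev):
    """Two-flavor oscillation probability P = sin^2(2 theta) * sin^2(1.267 * dm2 * L / E)."""
    # 1.267 absorbs hbar, c and the unit conversions for dm2 in eV^2, L in km, E in GeV
    return sin2_2theta * math.sin(1.267 * dm2_ev2 * length_km / energy_gev) ** 2


def first_maximum_energy_gev(dm2_ev2, length_km):
    """Energy at which the oscillation phase 1.267 * dm2 * L / E first reaches pi/2."""
    return 1.267 * dm2_ev2 * length_km / (math.pi / 2)


# approximate atmospheric parameters, straight up through the Earth (L ~ Earth's diameter)
e_max = first_maximum_energy_gev(2.4e-3, 12742.0)
survival = 1.0 - appearance_probability(1.0, 2.4e-3, 12742.0, e_max)
print(f"first oscillation maximum near {e_max:.0f} GeV, muon-neutrino survival there {survival:.3f}")
```

For neutrinos crossing the full Earth diameter, the first oscillation maximum comes out near 25 GeV, which is why a detector built for much higher energies has to reach down in energy to see these oscillations.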

Interestingly, neutrino oscillations were discovered almost entirely by astroparticle physicists, rather than at accelerators. The first clue came from a gold mine in Lead, South Dakota. There, in the late 1960s, Ray Davis and colleagues placed a 100,000 gallon tank of perchloroethylene (a dry cleaning fluid) 1500 meters underground. Theory predicted that neutrinos produced by nuclear fusion in the sun would turn a couple of chlorine atoms a day into argon. Through careful chemistry, these argon atoms could be collected and counted. Davis found only about 1/3 as many argon atoms as expected. Needless to say, this was a very difficult experiment, and it took years of data collection and careful checks, and further experiments (see below), before the discrepancy was accepted. The photo at the top of this post shows Davis swimming in the 300,000 gallon water tank that served as a shield for the perchloroethylene.

The next step forward was by the Super-Kamiokande experiment in Japan, also underground. In 1998, they published a paper comparing the rate of upward-going and downward-going muons from muon-neutrino interactions. They found a deficit of upward-going events which they described as consistent with neutrino oscillations, with the downward-going events (which had traveled just a short distance, and so presumably were un-oscillated) serving as a reference.

The third major observation was by the SNO collaboration, who deployed 1,000 tons of heavy water (D_2O) in a mine in Sudbury, Ontario, Canada. The deuterium in heavy water has a fairly unique property: it undergoes a reaction that is sensitive to all three neutrino flavors. SNO measured the total flux of neutrinos from the Sun, and found that it was as expected. In contrast, and in agreement with Ray Davis's experiment, the flux of electron neutrinos was only 1/2 to 1/3 of that expected (the exact number depends on the energy range). So, the missing electron neutrinos were not disappearing; they were turning into another flavor of neutrinos.

Some years later, the KamLAND collaboration observed oscillations of antineutrinos produced in nuclear reactors, further confirming the observation.

Now, IceCube has also made measurements of neutrino oscillations, using the same atmospheric neutrinos used by Super-Kamiokande. Although it is not easy for IceCube to reach down low enough in energy for these studies, we have the advantage of an enormous sensitive area, so that we can quickly gather enough data for a precision result. The plot below shows a recent IceCube result. The top plot shows IceCube data as a function of L/E; L is determined from the zenith angle of the event, which is used to calculate how far the neutrino propagated through the Earth. The data are compared with two curves, one showing the expectation without oscillations (red curve), and the second a best fit, which returns mixing angles and \delta m^2 compatible with other experiments. Although our measurement is not yet quite as powerful as some other experiments, we are quickly collecting more data, and also working on improving our understanding of the detector; the latter is accounted for in the systematic errors. The bottom plot is just the ratio of the data to the no-oscillation expectation; no oscillations is clearly ruled out.
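The conversion from zenith angle to path length L is plain geometry. A minimal sketch, assuming a spherical Earth of radius 6371 km, neutrinos produced at a fixed height of 15 km in the atmosphere (a rough typical value; real production heights vary), and a detector at the surface (IceCube's depth of roughly 1.5 to 2.5 km is neglected). The function name and the 20 GeV example energy are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0


def baseline_km(zenith_deg, production_height_km=15.0):
    """Path length from the production point in the atmosphere to a detector at the surface.

    Zenith 0 means arriving straight down from overhead; zenith 180 means
    arriving straight up, after crossing the full diameter of the Earth.
    """
    cos_z = math.cos(math.radians(zenith_deg))
    r, h = EARTH_RADIUS_KM, production_height_km
    # law of cosines for the triangle: Earth's center, detector, production point
    return math.sqrt((r + h) ** 2 - (r * r) * (1.0 - cos_z * cos_z)) - r * cos_z


for zenith in (0, 90, 120, 180):
    length = baseline_km(zenith)
    print(f"zenith {zenith:3d} deg: L = {length:7.0f} km, L/E at 20 GeV = {length / 20:6.1f} km/GeV")
```

Because L ranges from about 15 km for downward-going events to nearly 12,800 km for upward-going ones, a single detector samples a wide range of L/E.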

Monday, August 25, 2014

Why do science?

While preparing for the Intuit TedX conference (see my last post), the organizers also asked one other hard question: why should we (i.e. nonscientists) care about neutrinos or astrophysics? Scientists do not like to hear these questions, but we ignore them at our peril - especially funding peril. The people of the United States are paying for much of our research through their taxes, and they have both a right and a good reason to ask these questions. Unfortunately, they are not easy to answer, at least for me. When asked, I usually reply along one of two lines, neither of them completely satisfying.

1) We don't know what this is useful for now, but it will likely be (or could be) useful one day. This is easily backed up by many examples of physical phenomena that were long considered totally irrelevant to everyday life, but are now part of everyday technology. Quantum mechanics and electromagnetism are two good examples, but perhaps the best is general relativity. General relativity was long considered only applicable to abstruse astrophysical phenomena, such as gravity near a black hole, but it is now an important correction for GPS receivers to produce accurate results. This is a strong argument for research that has a clear connection to modern technology. For IceCube and ARIANNA, whose main purpose is to track down astrophysical cosmic-ray particle accelerators, one can argue that understanding these cosmic accelerators may teach us how to make better (plasma powered?) accelerators here on Earth. But there are many more examples of esoteric phenomena that remain just that, esoteric phenomena.

2) Science is interesting and important for its own sake. I believe this, and would venture to say that most scientists believe it. Many non-scientists also believe it. However, many do not, and, for the people who don't, I know of no good argument to make them change their mind. This view was nicely put forth by former Fermilab director Robert Wilson, who testified before Congress in 1969. He gave the value of science as

"It only has to do with the respect with which we regard one another, the dignity of men, our love of culture. It has to do with those things.

It has nothing to do with the military. I am sorry."

When pressed about military or economic benefits (i.e. competing with the Russians), he continued:

"Only from a long-range point of view, of a developing technology. Otherwise, it has to do with: Are we good painters, good sculptors, great poets? I mean all the things that we really venerate and honor in our country and are patriotic about.

In that sense, this new knowledge has all to do with honor and country but it has nothing to do directly with defending our country except to help make it worth defending."

This argument puts science on an equal footing with the arts, music or poetry, endeavors that are largely supported directly by the public, whereas science is largely government funded.
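The GPS example in point 1 can be made quantitative with a back-of-the-envelope calculation. A minimal sketch, assuming a circular orbit at an approximate GPS radius of 26,560 km, a receiver at the Earth's mean radius, and ignoring the Earth's rotation and orbital eccentricity; the constants are standard values, but the setup is simplified. Note that the net clock offset combines a general-relativistic (gravitational) effect with a special-relativistic (velocity) one.

```python
GM_EARTH = 3.986004418e14   # m^3 s^-2, Earth's gravitational parameter
C = 299_792_458.0           # m/s, speed of light
R_EARTH = 6.371e6           # m, mean radius (receiver on the ground)
R_GPS = 2.656e7             # m, approximate GPS orbital radius (assumed circular)
SECONDS_PER_DAY = 86_400

# General relativity: a clock higher in the gravitational potential runs faster
gravitational = GM_EARTH / C**2 * (1 / R_EARTH - 1 / R_GPS) * SECONDS_PER_DAY
# Special relativity: orbital motion (circular orbit, v^2 = GM/r) makes the clock run slower
v_squared = GM_EARTH / R_GPS
kinematic = -v_squared / (2 * C**2) * SECONDS_PER_DAY
net = gravitational + kinematic

print(f"GR: {gravitational * 1e6:+.1f} us/day, SR: {kinematic * 1e6:+.1f} us/day, net: {net * 1e6:+.1f} us/day")
print(f"uncorrected range error ~ {net * C / 1000:.0f} km per day")
```

The gravitational term comes out near +45.7 microseconds per day and the velocity term near -7.2, for a net of roughly 38 microseconds per day; multiplied by the speed of light, that is on the order of 10 km of ranging error accumulated per day if left uncorrected.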

Unfortunately, it is difficult to put these two arguments together in a way that they do not interfere with each other. I wish that these arguments were stronger, either individually or together. In short, I wrestle with this question a lot, and would welcome comments from scientists or nonscientists who have thought about how to answer this question.

--Spencer

Wednesday, August 20, 2014

Christmas in Antarctica: Presenting science to the general public

I recently had a very interesting, but sobering experience - giving a TedX talk in Mountain View, at a conference sponsored by Intuit (yes, the TurboTax people; they also make accounting software). The audience was mostly Intuit employees, from diverse backgrounds - software engineers, accountants, HR people, PR people, etc. There were 15 speakers (+4 videos of previously presented TedX talks) - me, a geologist, and 13 non-scientists. Most were excellent, and it was interesting to watch the diverse presentation styles. I was upstaged by the following speaker - a 10-year-old. She was the world champion in Brazilian jiu-jitsu, and spoke eloquently about being a warrior. Other presenters included a magician, an ex-CNN anchor speaking on money management, a pianist, and a capoeira group.

In discussing my talk, the organizers emphasized a couple of things: tell a good story, and engage with the audience. These are two basics, but are way too rarely found in scientific presentations. Our material is important and interesting to us, so we automatically assume that the audience will feel the same way. This is not true! I'm as guilty as any other scientist here, and really appreciated being reminded of these basics, both by the organizers beforehand, and while listening to the other speakers.

Over the past few years, my kids have been studying 5-paragraph essays. I learned this too: an introduction with hypothesis, 3 arguments (1 paragraph each) and a concluding paragraph. My kids learned it a bit differently. The first paragraph must start with a 'grabber:' a sentence to grab the reader's attention. Likewise, the organizers encouraged me to start with a grabber, to engage the audience, by asking about going someplace cold for the holidays. This led naturally to my being in Antarctica for the winter holidays, and thence into ARIANNA and neutrino astronomy. This may be the back door into the science, but times have changed, and it seemed to work well.

As scientists, we need to remember these lessons when we talk to non-scientists. Why should the audience care? Are we speaking to them or at them? Do we have an engaging presentation, with a good, clear story line? Is it at a level that they can follow?

These principles also apply to scientific presentations. How often have we sat through a seminar without any idea why the material is important to anybody? Or one filled with incomprehensible jargon or page-long equations relating variables that are not clearly defined? These are clear ways to avoid having to deal with job offers, etc. We need to do better.

So, ... thanks to Intuit, and to Kara de Frias and Kimchi Tyler Chen (the lead organizers), for arranging a very interesting and educational day.

Tuesday, July 22, 2014

The third time's a charm... 5 sigma

My last several posts concerned the observation of extraterrestrial neutrinos. The IceCube analysis published in November used 2 years of data to find evidence 'at the 4-sigma (standard deviation) level.' The number of sigma measures the statistical significance of the result. If the probabilities for our observation are described by a Gaussian ('normal') distribution, then a 4-sigma result has roughly a 1 in 16,000 probability of being a statistical fluctuation (counting fluctuations in either direction). This sounds like a pretty high standard, but, at least in physics, it is not normally considered enough to claim a discovery. There are two reasons for this.
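For readers who want to check the 1 in 16,000 figure, here is a minimal sketch that converts "n sigma" into a Gaussian tail probability using the complementary error function. The function names are my own, purely for illustration. The two-sided version (fluctuations up or down) reproduces the number above; the one-sided version, which only counts upward fluctuations, gives half that probability, so always check which convention a quoted number uses.

```python
import math


def two_sided_p(n_sigma: float) -> float:
    """Probability that a Gaussian fluctuation lands at least n_sigma
    standard deviations from the mean, in either direction."""
    return math.erfc(n_sigma / math.sqrt(2.0))


def one_sided_p(n_sigma: float) -> float:
    """Probability of an upward fluctuation of at least n_sigma."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


if __name__ == "__main__":
    # Print both conventions for a few significances mentioned in these posts.
    for n in (3.0, 4.0, 5.0, 5.7):
        p2 = two_sided_p(n)
        p1 = one_sided_p(n)
        print(f"{n} sigma: two-sided 1 in {1 / p2:,.0f}, one-sided 1 in {1 / p1:,.0f}")
```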

The first is known as the 'trials factor.' If you have a complex data set and a powerful computer, it is pretty easy to do far more than 16,000 experiments, for example by looking in different regions of the sky, at different types of neutrinos, at different energies, etc. Of course, within a single analysis, we keep track of the trials factor: in a search for point sources of neutrinos, we know how many different (independent) locations we are looking in, how many energy bins, etc. However, this becomes harder when there are multiple analyses looking for different things. If we publish 9 null results and one positive one, should we dilute the 1 in 16,000 to 1 in 1,600?
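The 1 in 1,600 comes from a simple trials-factor correction. A minimal sketch, assuming the searches are fully independent (real analyses are often correlated, which makes the correction smaller): the chance that at least one of N searches fluctuates to a local probability p or better is 1 - (1 - p)^N, which for small p is close to the simpler N·p (Bonferroni) scaling. The helper names are hypothetical, and the conversion back to sigma uses Python's standard `statistics.NormalDist`.

```python
import math
from statistics import NormalDist


def global_p(local_p: float, n_trials: int) -> float:
    """Chance that at least one of n_trials independent searches
    fluctuates to local_p or better: 1 - (1 - p)^N, computed stably."""
    return -math.expm1(n_trials * math.log1p(-local_p))


def sigma_from_two_sided_p(p: float) -> float:
    """Convert a two-sided Gaussian probability back into 'n sigma'."""
    return NormalDist().inv_cdf(1.0 - p / 2.0)


if __name__ == "__main__":
    local = 1 / 16_000  # roughly the 4-sigma figure from the text
    for n in (1, 10, 100):
        p = global_p(local, n)
        print(f"{n:>3} trials: 1 in {1 / p:,.0f} (~{sigma_from_two_sided_p(p):.2f} sigma)")
```

With 10 independent trials, the "4-sigma" result shrinks to roughly 3.4 sigma, which is why keeping track of trials matters.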

In the broader world of science, it is well known that it is easier (and better for one's career) to publish positive results than upper limits. So positive results are more likely to be published than negative ones. Cold fusion (which made a big splash in the late 1980s) is a shining example of this. When it was first reported, many, many physics departments set up cells to search for cold fusion. Most of them didn't find anything, and quietly dropped the subject. However, the few that saw evidence for cold fusion published their results. The result was that a literature search could find almost as many positive results as negative ones. However, if you asked around, the ratio was very different.

The second reason is that the probability distribution may not be Gaussian, for any of a number of reasons. One concerns small numbers: if only a few events are seen, then the distributions are better described by a Poisson distribution than a Gaussian. Of course, we now know how to handle this. Another is more nebulous: there may be systematic uncertainties that are not well described by a Gaussian distribution, or there may be unknown correlations between these systematic uncertainties. We try to account for these factors in assessing significance, but this is a rather difficult point.
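To see why small numbers matter, here is a minimal sketch comparing the exact Poisson probability of seeing at least k events, given an expected background mu, with a naive Gaussian approximation (mean mu, width sqrt(mu)). The numbers used (1 expected background event, 5 observed) are hypothetical and chosen only for illustration; they are not IceCube numbers. The naive Gaussian calls this a 4-sigma excess, but the Poisson probability is more than 100 times larger.

```python
import math


def poisson_tail(k_obs: int, mu: float) -> float:
    """P(N >= k_obs) for a Poisson count N with mean mu (background only).
    Note: 1 - sum loses precision for extremely small tails; summing the
    upper terms directly is better there."""
    below = sum(math.exp(-mu) * mu**k / math.factorial(k) for k in range(k_obs))
    return 1.0 - below


def naive_gaussian_tail(k_obs: int, mu: float) -> float:
    """The same question, treating the count as Gaussian with mean mu
    and standard deviation sqrt(mu) (one-sided)."""
    z = (k_obs - mu) / math.sqrt(mu)
    return 0.5 * math.erfc(z / math.sqrt(2.0))


if __name__ == "__main__":
    mu_background = 1.0  # hypothetical expected background
    k_seen = 5           # hypothetical observed count
    print("Poisson p:       ", poisson_tail(k_seen, mu_background))
    print("naive Gaussian p:", naive_gaussian_tail(k_seen, mu_background))
```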

Anyway, that long statistical digression explains why our November paper was entitled "Evidence for..." rather than "Discovery of..."

Now we have released a new result, available at http://arxiv.org/abs/arXiv:1405.5303. The analysis was expanded to include a third year of data, without any other changes. The additional year of data included another 9 events, including Big Bird. The data looked much like the first two years, and so pushed the statistical significance up to 5.7 sigma, leading to the title "Observation of..." Of course, 5 sigma doesn't guarantee that a result is correct, but this one looks pretty solid.

With this result, we are now moving from trying to prove the existence of ultra-high energy astrophysical neutrinos to characterizing the flux and, from that, hopefully, finally pinning down the source(s) of the highest energy cosmic rays.

Big Bird gets Energy

In a previous post, I wrote about our most energetic neutrino, Big Bird, but could not give you its energy. I am happy to report that the energy has now been made public: 2.0 PeV, within about 12% (assuming that it is an electromagnetic cascade). This is the most energetic neutrino yet seen. For comparison, the energy of each proton circulating in the LHC beams at full energy (which will not be achieved until later this year) is about 0.007 PeV (7 TeV), roughly 285 times lower.
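The comparison is simple unit arithmetic, sketched below. It assumes the LHC design energy of 7 TeV per proton (the 0.007 PeV above), and it treats the "about 12%" as a simple symmetric band around 2.0 PeV, which is my simplification for illustration rather than how the uncertainty was actually derived.

```python
PEV_PER_TEV = 1e-3  # 1 TeV = 0.001 PeV


def energy_ratio(nu_pev: float, proton_tev: float) -> float:
    """How many times more energetic the neutrino is than one LHC proton."""
    return nu_pev / (proton_tev * PEV_PER_TEV)


if __name__ == "__main__":
    big_bird_pev = 2.0   # from the text
    rel_unc = 0.12       # "within about 12%", treated as a symmetric band (assumption)
    lhc_proton_tev = 7.0 # LHC design energy per proton
    central = energy_ratio(big_bird_pev, lhc_proton_tev)
    low = energy_ratio(big_bird_pev * (1 - rel_unc), lhc_proton_tev)
    high = energy_ratio(big_bird_pev * (1 + rel_unc), lhc_proton_tev)
    print(f"ratio ~ {central:.0f} (range {low:.0f} to {high:.0f})")
```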
