Monday, December 22, 2014

Computing in Science, including a walk down memory lane

One of the bigger changes in science over the past 20+ years has been the increasing importance of computing. That's probably not a surprise to anyone who has been paying attention, but some of the changes go beyond what one might expect.

Computers have been used to collect and analyze data for way more than 20 years. When I was in graduate school, our experiment (the Mark II detector at PEP) was controlled by a computer, as were all large experiments. The data was written on 6250 bpi [bits per inch] 9-track magnetic tapes. These tapes were about the size and weight of a small laptop, and were written or read by a drive the size of a small refrigerator. Each tape could hold a whopping 140 megabytes. This data was transferred to the central computing center via a fast network - a motor scooter with a hand basket. It was a short drive, and, if you packed the basket tightly enough, the data rate was quite high. The data was then analyzed on a central cluster of a few large IBM mainframes.
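To put a rough number on the scooter network, here is a minimal back-of-the-envelope sketch. Only the 140 megabytes per tape comes from the description above; the number of tapes in the basket and the trip time are made-up values for illustration, and the function name is hypothetical.

```python
def sneakernet_rate_mb_per_s(tapes, mb_per_tape=140.0, trip_seconds=600.0):
    """Effective transfer rate, in megabytes per second, of carrying tapes by hand.

    mb_per_tape is the capacity quoted in the post; tapes and trip_seconds
    are assumptions chosen only to illustrate the arithmetic.
    """
    if trip_seconds <= 0:
        raise ValueError("trip_seconds must be positive")
    return tapes * mb_per_tape / trip_seconds


if __name__ == "__main__":
    # Assumed load: 20 tapes in the basket and a 10-minute ride.
    rate = sneakernet_rate_mb_per_s(tapes=20)
    print(f"{rate:.2f} MB/s, or roughly {rate * 8:.0f} megabits per second")
```

The rate scales linearly with the number of tapes, which is why packing the basket tightly mattered.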

At the time, theorists were also starting to use computers, and the first programs for symbolic manipulation (computer algebra) were becoming available. Their use has exploded over the past 20 years, and now most theoretical calculations require computers. For complex theories like quantum chromodynamics (QCD), the calculations are way too complicated for pencil and paper. In fact, the only way that we know to calculate QCD parameters manifestly requires large-scale computing. It is called lattice gauge theory. It divides space-time into cells, and uses sophisticated relaxation methods to calculate configurations of quarks and gluons on that lattice. Lattice gauge theory has been around since the 1970s, but only in the last decade or so have computers become powerful enough to complete calculations using physically realistic parameters.

Lattice gauge theory is really the tip of the iceberg, though. Computer simulations have become so powerful and so ubiquitous that they have really become a third approach to physics, alongside theory and experiment. For example, one major area of nuclear/particle physics research is developing codes that can simulate high-energy collisions. These collisions are so complicated, with so many competing phenomena present, that they cannot be understood from first principles. Consider heavy-ion studies at the Relativistic Heavy Ion Collider (RHIC) and CERN's Large Hadron Collider (LHC): when two ions collide, the number of nucleon-on-nucleon collisions (a nucleon being a proton or a neutron) depends on the geometry of the collision. The nucleon-nucleon collisions produce a number of quarks and gluons, which then interact with each other. Eventually, the quarks and gluons form hadrons (mesons, composed of a quark and an antiquark, and baryons, made of three quarks). These hadrons then interact for a while, and, eventually, fly apart. During both interaction phases, both short- and long-range forces are in play, and it appears that a certain component of the interactions may be better described using hydrodynamics (treating the interacting matter as a liquid) than in the more traditional particle-interaction sense. A large number of computer codes have been developed to simulate these interactions, each emphasizing different aspects of the interactions. A cottage industry has developed to compare the codes, to use them to make predictions for new types of observables, and to expand their range of applicability. Similar comments apply, broadly, to many other areas of physics, particularly solid-state physics topics such as semiconductors, superconductivity, and nano-physics.
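The dependence of the number of nucleon-nucleon collisions on geometry can be illustrated with a toy Monte Carlo, loosely in the spirit of a Glauber-type calculation. This is only a sketch under strong assumptions, not any of the production codes mentioned above: nucleons are scattered uniformly inside a hard sphere (real calculations use a smoother density profile), two nucleons are taken to collide if their transverse separation is below a fixed distance set by an assumed cross section, and the default numbers (197 nucleons, a 6.4 fm radius, a 4.2 fm² cross section) are illustrative values roughly appropriate for gold ions. All function and parameter names are hypothetical.

```python
import math
import random


def sample_nucleus(n_nucleons, radius_fm, rng):
    """Place nucleons uniformly inside a sphere; return transverse (x, y) positions."""
    points = []
    while len(points) < n_nucleons:
        x, y, z = (rng.uniform(-radius_fm, radius_fm) for _ in range(3))
        if x * x + y * y + z * z <= radius_fm ** 2:  # rejection sampling
            points.append((x, y))
    return points


def count_binary_collisions(impact_fm, n_nucleons=197, radius_fm=6.4,
                            sigma_fm2=4.2, seed=0):
    """Count nucleon pairs that overlap in the transverse plane for one event.

    impact_fm is the transverse distance between the two nuclear centers.
    A pair collides if its transverse separation d satisfies pi * d^2 <= sigma.
    """
    rng = random.Random(seed)
    d2_max = sigma_fm2 / math.pi
    # Shift the two nuclei by half the impact parameter in opposite directions.
    a = [(x - impact_fm / 2.0, y) for x, y in sample_nucleus(n_nucleons, radius_fm, rng)]
    b = [(x + impact_fm / 2.0, y) for x, y in sample_nucleus(n_nucleons, radius_fm, rng)]
    return sum(1 for ax, ay in a for bx, by in b
               if (ax - bx) ** 2 + (ay - by) ** 2 <= d2_max)


if __name__ == "__main__":
    for b in (0.0, 5.0, 10.0, 15.0):
        print(f"impact parameter {b:4.1f} fm: {count_binary_collisions(b)} collisions")
```

Head-on collisions give many nucleon pairs, grazing ones give few, and once the impact parameter exceeds twice the radius the nuclei miss each other entirely.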

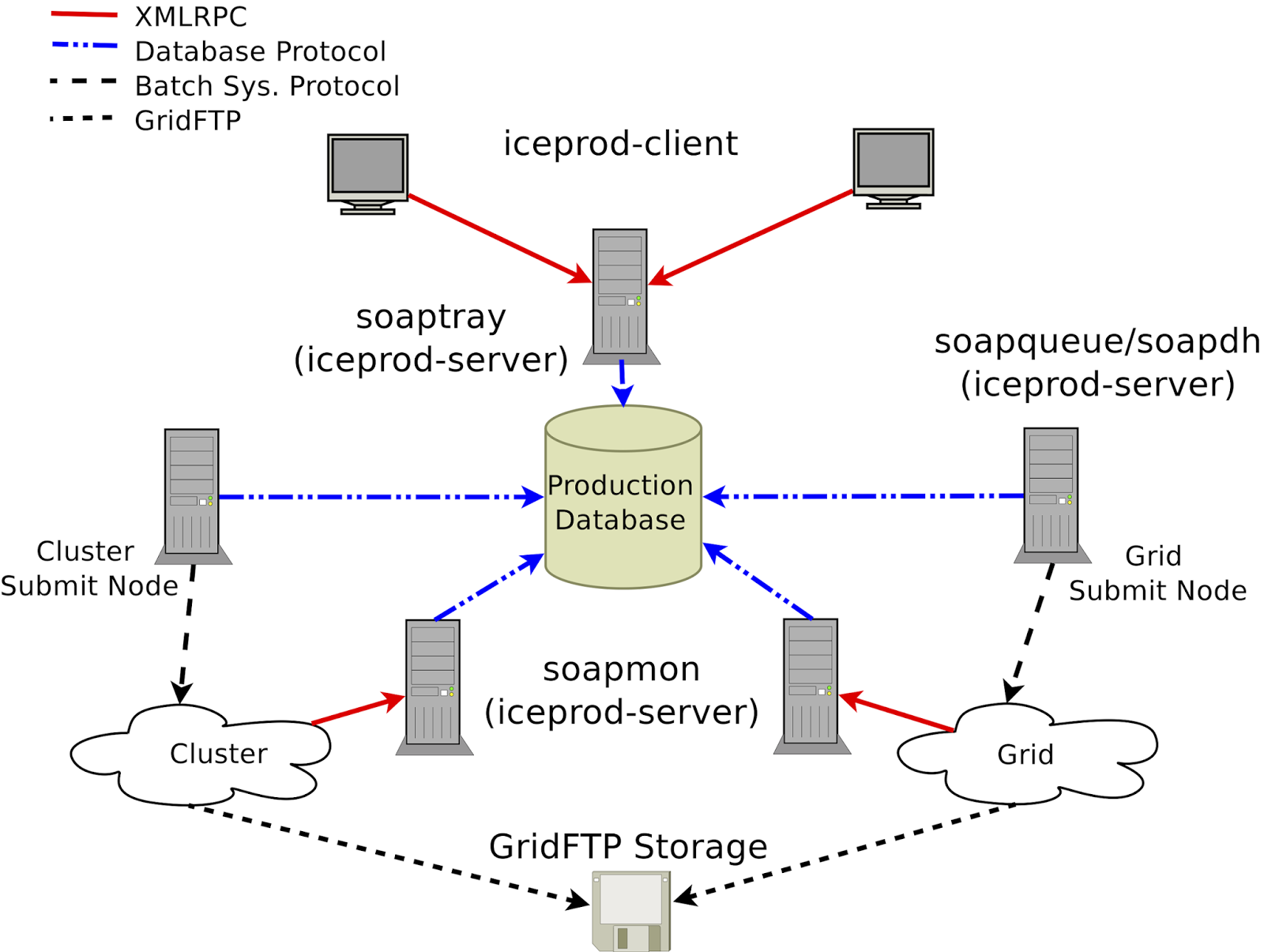

Of course, computing is also important for Antarctic neutrino experiments. IceCube uses computers for data collection (each Digital Optical Module (DOM) contains an embedded CPU), for event selection and reconstruction, and, particularly, for detector simulation. The hardest part of the simulation involves propagating photons through the ice, from the charged particle that creates them to the DOMs. Here, we need good models of how light scatters and is absorbed in the ice; developing and improving these models has been an ongoing endeavor over the past decade. Lisa Gerhardt, Juan Carlos Diaz Velez and I have written an article discussing IceCube's ongoing challenges, "Adventures in Antarctic Computing, or How I Learned to Stop Worrying and Love the Neutrino," which appeared in the September issue of IEEE Computer. I may be biased, but I think it's a really interesting article, which discusses some of the diverse computing challenges that IceCube faces. In addition to the algorithmic challenges, it also discusses the challenges inherent to the scale of IceCube computing, where we use computer clusters all over the world - hence the diagram at the top of the post.
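To give a flavor of what propagating photons through a scattering, absorbing medium involves, here is a toy random-walk sketch. It is not IceCube's simulation: it assumes uniform ice, isotropic scattering (scattering in real ice is strongly forward-peaked), and illustrative scattering and absorption lengths of 25 m and 100 m. It simply estimates what fraction of photons get at least a given distance from their source before being absorbed. All names and default values are assumptions.

```python
import math
import random


def random_direction(rng):
    """Return a unit vector drawn uniformly over the sphere."""
    cos_t = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    sin_t = math.sqrt(1.0 - cos_t * cos_t)
    return sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t


def fraction_reaching(distance_m, n_photons=2000, scattering_length_m=25.0,
                      absorption_length_m=100.0, seed=1):
    """Estimate the fraction of photons that get distance_m from the source.

    Each photon is given a total path length before absorption (exponential,
    mean absorption_length_m) and travels in straight segments between
    scatters (exponential, mean scattering_length_m), picking a new random
    direction at every scatter.
    """
    rng = random.Random(seed)
    reached = 0
    for _ in range(n_photons):
        x = y = z = 0.0
        remaining = rng.expovariate(1.0 / absorption_length_m)
        while remaining > 0.0:
            step = min(rng.expovariate(1.0 / scattering_length_m), remaining)
            dx, dy, dz = random_direction(rng)
            x, y, z = x + step * dx, y + step * dy, z + step * dz
            remaining -= step
            # A straight segment can only leave the sphere at its end point,
            # so checking the position after each step is sufficient.
            if x * x + y * y + z * z >= distance_m ** 2:
                reached += 1
                break
    return reached / n_photons


if __name__ == "__main__":
    for d in (10.0, 50.0, 100.0):
        print(f"{d:5.1f} m: fraction reaching = {fraction_reaching(d):.3f}")
```

Even in this crude picture the fraction falls quickly with distance, which hints at why the quality of the ice model matters so much for the simulation.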
